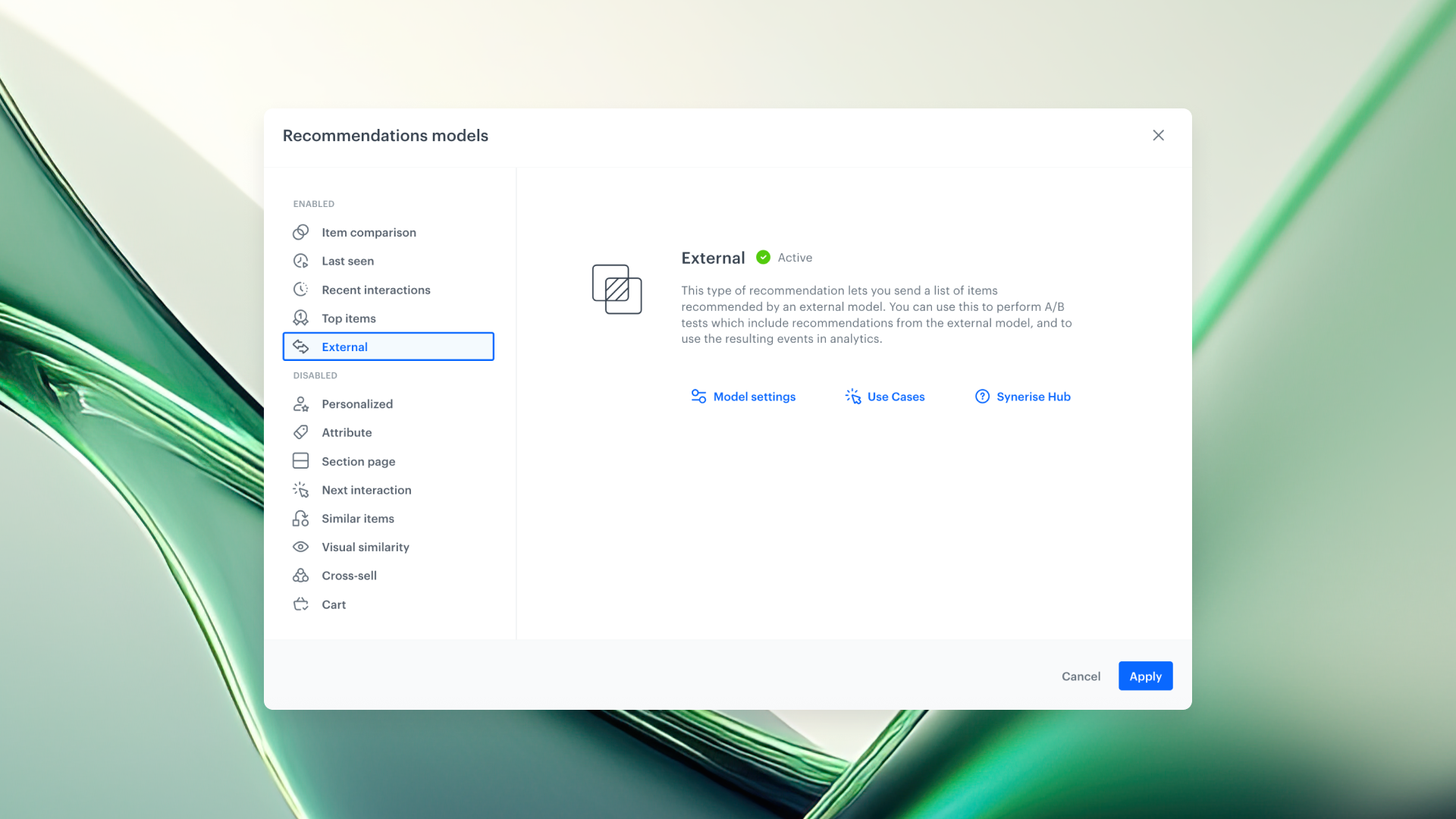

This model lets you declare item IDs recommended by an external model and generate the same event as if the recommendation had been calculated by Synerise. These items must exist in the item feed. You can use this to run A/B/X tests that include recommendations from the external model, and to use the resulting events in analytics.

Because the recommended items are explicitly provided in the request, the context has no influence on them. The customer context is only needed to assign an event to a profile.

We’ve added support for External recommendation variants in A/B/X testing, which makes it possible to compare recommendations generated by outside systems (such as custom ML engines or third-party tools) with native Synerise models.

This means you no longer need to run tests outside Synerise or compare results manually. Plug in your external item IDs, run the experiment, and let Synerise track and evaluate performance across all variants. The entire test flow, including event generation and analytics, is preserved regardless of where the recommendation logic comes from.

The setup is simple:

Provide the external item IDs in the request (`externalItemId` or `recommendedItemsFromExternalModel`).

If you don’t provide the item IDs when the external variant is active, no recommendation is returned and no event is generated. To recover, resend the request with valid item IDs, and the system logs the recommendation as usual.
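Because a request without item IDs returns no recommendation and generates no event, it can help to validate the list on your side before sending it. The following is a minimal sketch, not the Synerise API: the helper `build_external_variant_payload`, the `clientUUID` field, and the payload shape are assumptions made for illustration. Only the name `recommendedItemsFromExternalModel` comes from the text above, and how it is actually passed (query parameter or body field) should be checked against the API reference.

```python
from typing import Dict, List, Sequence


def build_external_variant_payload(
    client_uuid: str, item_ids: Sequence[str]
) -> Dict[str, object]:
    """Build a hypothetical payload for an external recommendation variant.

    The payload shape is an assumption for illustration only.
    """
    # Drop blank entries and duplicates, keeping the external model's ranking order.
    cleaned: List[str] = []
    for item_id in item_ids:
        item_id = item_id.strip()
        if item_id and item_id not in cleaned:
            cleaned.append(item_id)

    # Without item IDs, no recommendation is returned and no event is generated,
    # so fail early instead of sending a request that cannot succeed.
    if not cleaned:
        raise ValueError("At least one external item ID is required")

    return {
        "clientUUID": client_uuid,  # assumed field: ties the event to a profile
        "recommendedItemsFromExternalModel": cleaned,
    }


if __name__ == "__main__":
    payload = build_external_variant_payload("profile-123", ["sku-1", " sku-2 ", "sku-1"])
    print(payload)
```

The check only guards against empty input. It cannot confirm that the items exist in the item feed, which is still required.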

This feature makes it easy to test experimental models, validate LLM-based suggestions, or benchmark third-party tools in your existing optimization workflows without losing visibility or control.

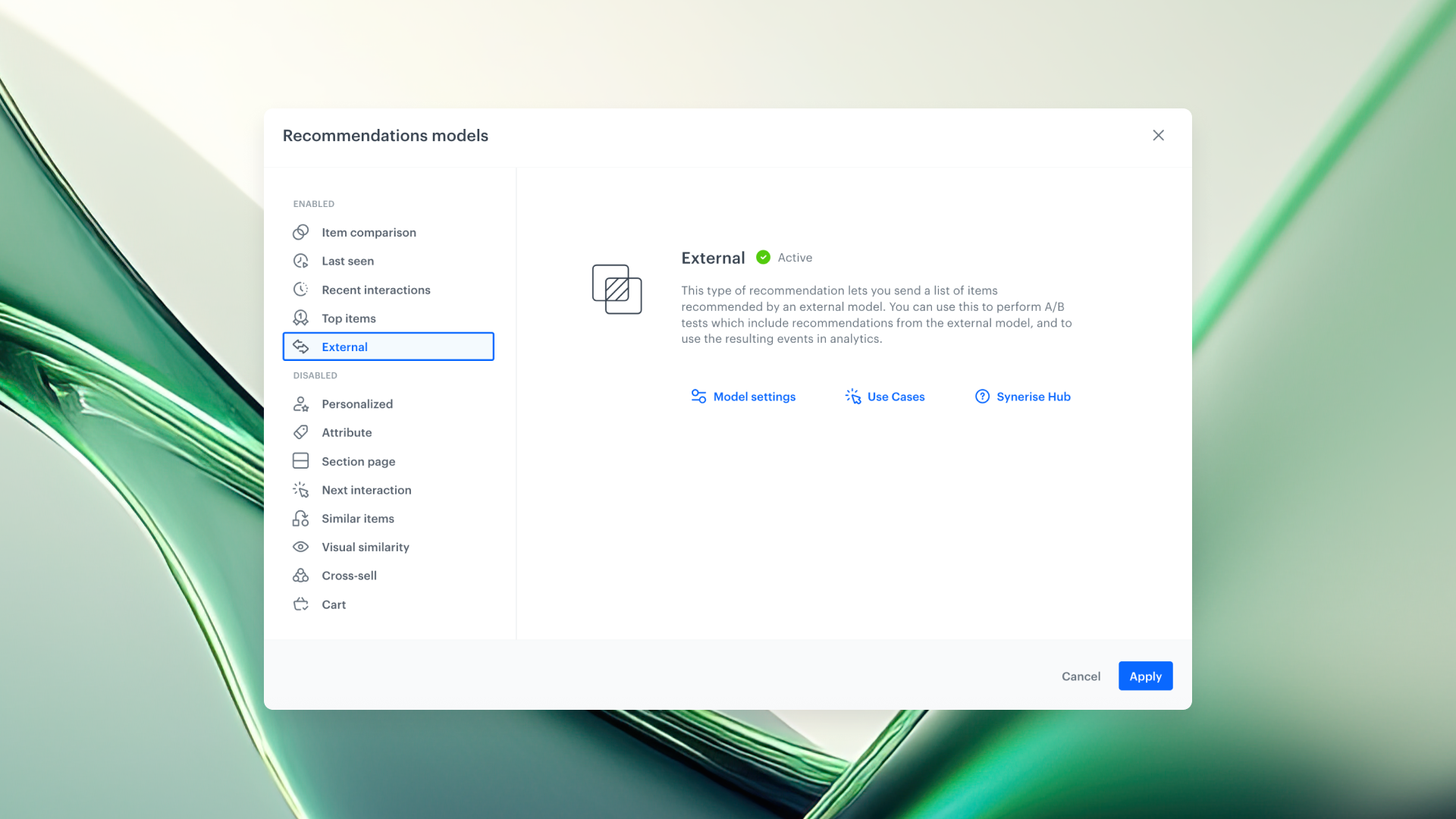
